Recently, artificial intelligence, especially tools such as ChatGPT, Gemini, and Copilot, has become not only a focus of interest for technology enthusiasts but also a lure used by scammers, who exploit its popularity to spread malware and run phishing campaigns. This trend is worrying: as interest in AI grows, so does the number of people who fall for false promises of easy profit or effortless problem-solving with these technologies.

1. Spreading malware through fake AI tools

Scammers often create fake applications that promise access to advanced AI tools, but in reality, they contain malware. These fake applications spread, for example, through social media ads or as attachments in emails that claim to offer exclusive access to popular AI tools. Once the user downloads and runs such an application, their device may be infected with malware that can steal sensitive information such as passwords or financial data (Dvojklik.cz, 2023; Trend Micro, 2023).

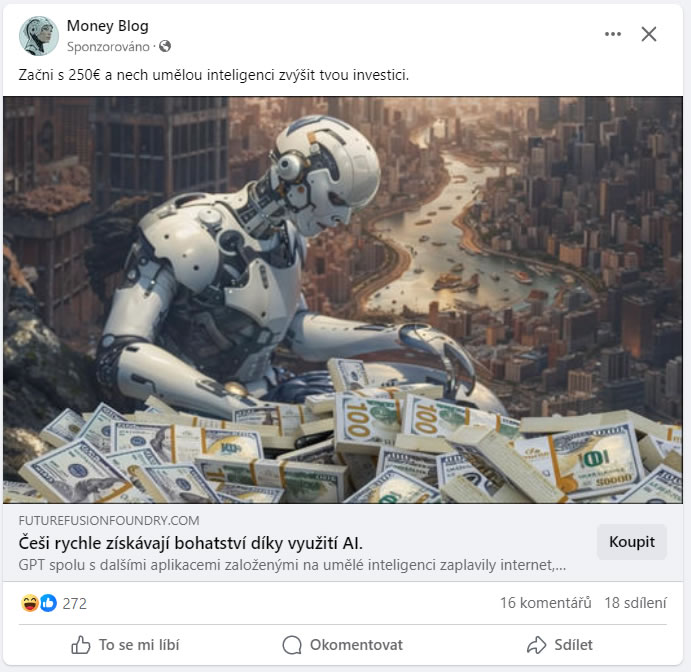

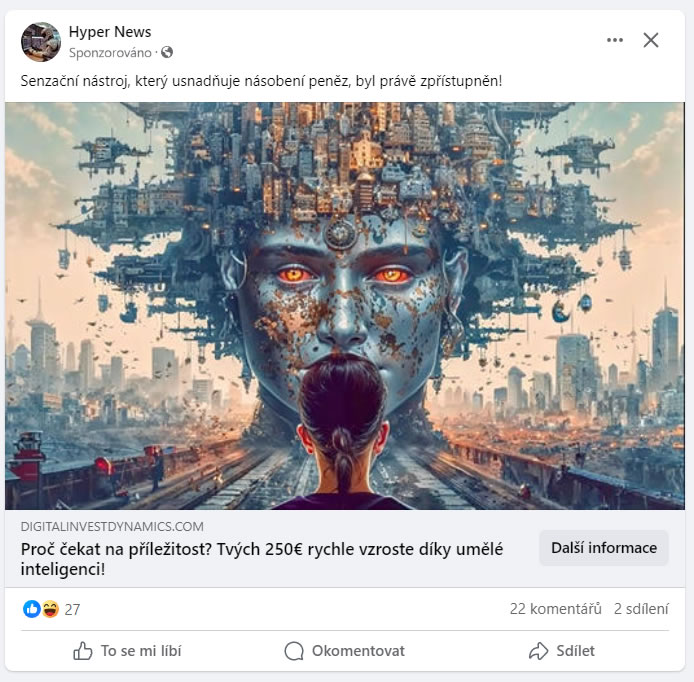

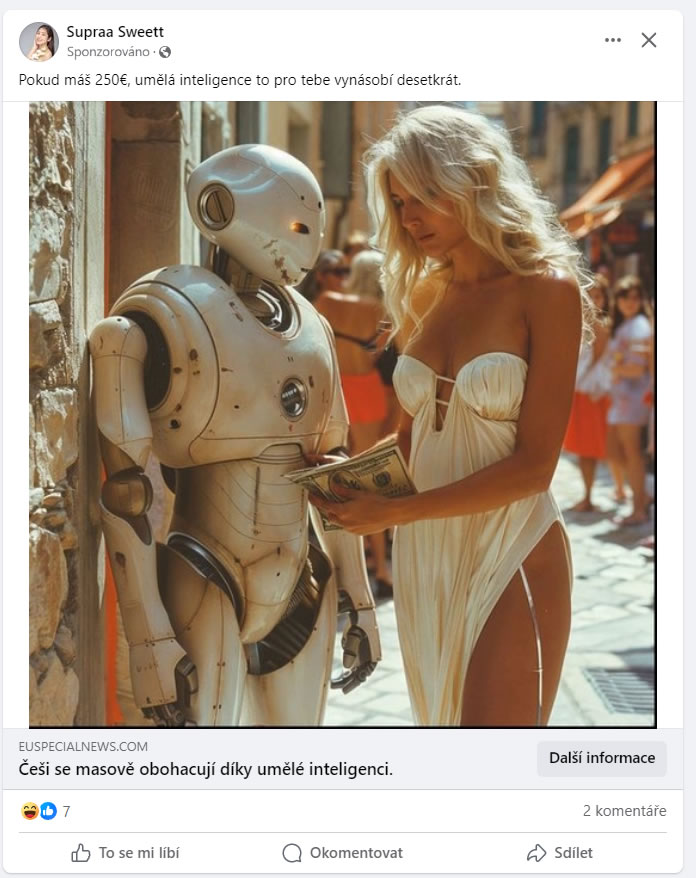

2. Phishing campaigns and fake promises of miraculous wealth

Another common way scammers exploit the popularity of AI is through phishing campaigns that lure victims with promises of quick wealth thanks to AI. Phishing emails or messages on social networks often contain links to fake websites where users are asked to enter their personal or payment information. These websites can look very convincing because they are designed to mimic trustworthy platforms (The Verge, 2023; WeLiveSecurity, 2023).

3. Exploiting social networks to promote scams

Social networks play a key role in spreading these scams. Scammers use the networks' advertising systems to run fake ads, which often promise that anyone can earn millions thanks to AI. In reality, these ads lead to sites where users fall victim to phishing or are prompted to download malware (WeLiveSecurity, 2023).

How to protect yourself from these scams

To avoid these pitfalls, it is important to follow a few basic rules:

A. Do not download software from untrusted sources. If an application promising AI features catches your eye, make sure it comes from a source you can verify, such as the provider's official website or an official app store.

B. Beware of offers that sound too good to be true. A promise of quick, effortless wealth thanks to AI is most likely a scam.

C. Verify the websites you click on. If an email or social media message offers you a link to "exclusive" content, check the address carefully and make sure it really leads to the site it claims to before you enter any personal or payment information.
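For readers who are comfortable with a little code, rule C can be illustrated with a short Python sketch. It only checks that a link uses HTTPS and that its host is one of a set of trusted domains or a subdomain of one. The `TRUSTED_DOMAINS` list and the function name `is_trusted_link` are assumptions made for this illustration, not part of any official tool, and passing the check does not prove that a site is safe.

```python
from urllib.parse import urlsplit

# Hypothetical allow-list for illustration; use the domains you actually trust.
TRUSTED_DOMAINS = {"openai.com", "google.com", "microsoft.com"}


def is_trusted_link(url: str, trusted=TRUSTED_DOMAINS) -> bool:
    """Return True only for HTTPS links whose host is a trusted domain
    or a subdomain of one. This is a basic sanity check, not a guarantee."""
    try:
        parts = urlsplit(url)
        host = (parts.hostname or "").lower().rstrip(".")
    except ValueError:
        # Malformed addresses are treated as untrusted.
        return False
    if parts.scheme != "https" or not host:
        return False
    # Compare whole domain labels, so "openai.com.free-gpt.example"
    # does not pass just because it contains "openai.com".
    return any(host == d or host.endswith("." + d) for d in trusted)


if __name__ == "__main__":
    for link in (
        "https://chat.openai.com/",
        "https://openai.com.free-gpt.example/login",
        "http://openai.com/",
    ):
        print(link, "->", is_trusted_link(link))
```

The check compares the end of the host name rather than searching the whole address, because scam links often place a well-known name at the start of the address or in the path to look legitimate.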

For E-Bezpečí,

Kamil Kopecký

Sources:

Dvojklik.cz (2023). ChatGPT as Bait: How Attackers Exploit Popular AI Tools.

Trend Micro (2023). Beware of Fake AI Tools Masking Very Real Malware Threats.

The Verge (2023). Meta's Warning: ChatGPT Malware is Targeting Business Accounts.

WeLiveSecurity (2023). Beware Fake AI Tools Masking Very Real Malware Threat.